Computational fluid dynamics

| Computational physics | ||||||||

|

||||||||

| Numerical analysis · Simulation Data analysis · Visualization

|

||||||||

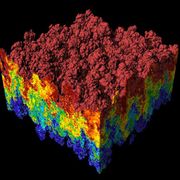

Computational fluid dynamics (CFD) is one of the branches of fluid mechanics that uses numerical methods and algorithms to solve and analyze problems that involve fluid flows. Computers are used to perform the millions of calculations required to simulate the interaction of liquids and gases with surfaces defined by boundary conditions. Even with high-speed supercomputers only approximate solutions can be achieved in many cases. Ongoing research, however, may yield software that improves the accuracy and speed of complex simulation scenarios such as transonic or turbulent flows. Initial validation of such software is often performed using a wind tunnel with the final validation coming in flight tests.

Contents |

Background and history

_Mach_7_computational_fluid_dynamic_(CFD).jpg)

The fundamental basis of almost all CFD problems are the Navier–Stokes equations, which define any single-phase fluid flow. These equations can be simplified by removing terms describing viscosity to yield the Euler equations. Further simplification, by removing terms describing vorticity yields the full potential equations. Finally, these equations can be linearized to yield the linearized potential equations.

Historically, methods were first developed to solve the Linearized Potential equations. Two-dimensional methods, using conformal transformations of the flow about a cylinder to the flow about an airfoil were developed in the 1930s.[1] The computer power available paced development of three-dimensional methods. The first paper on a practical three-dimensional method to solve the linearized potential equations was published by John Hess and A.M.O. Smith of Douglas Aircraft in 1967.[2] This method discretized the surface of the geometry with panels, giving rise to this class of programs being called Panel Methods. Their method itself was simplified, in that it did not include lifting flows and hence was mainly applied to ship hulls and aircraft fuselages. The first lifting Panel Code (A230) was described in a paper written by Paul Rubbert and Gary Saaris of Boeing Aircraft in 1968. In time, more advanced three-dimensional Panel Codes were developed at Boeing (PANAIR, A502), Lockheed (Quadpan), Douglas (HESS), McDonnell Aircraft (MACAERO), NASA (PMARC) and Analytical Methods (WBAERO, USAERO and VSAERO). Some (PANAIR, HESS and MACAERO) were higher order codes, using higher order distributions of surface singularities, while others (Quadpan, PMARC, USAERO and VSAERO) used single singularities on each surface panel. The advantage of the lower order codes was that they ran much faster on the computers of the time. Today, VSAERO has grown to be a multi-order code and is the most widely used program of this class. It has been used in the development of many submarines, surface ships, automobiles, helicopters , aircraft, and more recently wind turbines. Its sister code, USAERO is an unsteady panel method that has also been used for modeling such things as high speed trains and racing yachts. The NASA PMARC code from an early version of VSAERO and a derivative of PMARC, named CMARC, is also commercially available.

In the two-dimensional realm, quite a number of Panel Codes have been developed for airfoil analysis and design. These codes typically have a boundary layer analysis included, so that viscous effects can be modeled. Professor Richard Eppler of the University of Stuttgart developed the PROFIL code, partly with NASA funding, which became available in the early 1980s. This was soon followed by MIT Professor Mark Drela's XFOIL code. Both PROFIL and XFOIL incorporate two-dimensional panel codes, with coupled boundary layer codes for airfoil analysis work. PROFIL uses a conformal transformation method for inverse airfoil design, while XFOIL has both a conformal transformation and an inverse panel method for airfoil design. Both codes are widely used.

An intermediate step between Panel Codes and Full Potential codes were codes that used the Transonic Small Disturbance equations. In particular, the three-dimensional WIBCO code, developed by Charlie Boppe of Grumman Aircraft in the early 1980s has seen heavy use.

Developers next turned to Full Potential codes, as panel methods could not calculate the non-linear flow present at transonic speeds. The first description of a means of using the Full Potential equations was published by Earll Murman and Julian Cole of Boeing in 1970. Frances Bauer, Paul Garabedian and David Korn of the Courant Institute at New York University (NYU) wrote a series of two-dimensional Full Potential airfoil codes that were widely used, the most important being named Program H. A further growth of Program H was developed by Bob Melnik and his group at Grumman Aerospace as Grumfoil. Antony Jameson, originally at Grumman Aircraft and the Courant Institute of NYU, worked with David Caughey to develop the important three-dimensional Full Potential code FLO22 in 1975. Many Full Potential codes emerged after this, culminating in Boeing's Tranair (A633) code, which still sees heavy use.

The next step was the Euler equations, which promised to provide more accurate solutions of transonic flows. The methodology used by Jameson in his three-dimensional FLO57 code (1981) was used by others to produce such programs as Lockheed's TEAM program and IAI/Analytical Methods' MGAERO program. MGAERO is unique in being a structured cartesian mesh code, while most other such codes use structured body-fitted grids (with the exception of NASA's highly successful CART3D code, Lockheed's SPLITFLOW code and Georgia Tech's NASCART-GT).[3] Antony Jameson also developed the three-dimensional AIRPLANE code (1985) which made use of unstructured tetrahedral grids.

In the two-dimensional realm, Mark Drela and Michael Giles, then graduate students at MIT, developed the ISES Euler program (actually a suite of programs) for airfoil design and analysis. This code first became available in 1986 and has been further developed to design, analyze and optimize single or multi-element airfoils, as the MSES program. MSES sees wide use throughout the world. A derivative of MSES, for the design and analysis of airfoils in a cascade, is MISES, developed by Harold "Guppy" Youngren while he was a graduate student at MIT.

The Navier–Stokes equations were the ultimate target of developers. Two-dimensional codes, such as NASA Ames' ARC2D code first emerged. A number of three-dimensional codes were developed (OVERFLOW, CFL3D are two successful NASA contributions), leading to numerous commercial packages.

Technicalities

The most fundamental consideration in CFD is how one treats a continuous fluid in a discretized fashion on a computer. One method is to discretize the spatial domain into small cells to form a volume mesh or grid, and then apply a suitable algorithm to solve the equations of motion (Euler equations for inviscid, and Navier–Stokes equations for viscous flow). In addition, such a mesh can be either irregular (for instance consisting of triangles in 2D, or pyramidal solids in 3D) or regular; the distinguishing characteristic of the former is that each cell must be stored separately in memory. Where shocks or discontinuities are present, high resolution schemes such as Total Variation Diminishing (TVD), Flux Corrected Transport (FCT), Essentially NonOscillatory (ENO), or MUSCL schemes are needed to avoid spurious oscillations (Gibbs phenomenon) in the solution.

If one chooses not to proceed with a mesh-based method, a number of alternatives exist, notably :

- Smoothed particle hydrodynamics (SPH), a Lagrangian method of solving fluid problems,

- Spectral methods, a technique where the equations are projected onto basis functions like the spherical harmonics and Chebyshev polynomials,

- Lattice Boltzmann methods (LBM), which simulate an equivalent mesoscopic system on a Cartesian grid, instead of solving the macroscopic system (or the real microscopic physics).

It is possible to directly solve the Navier–Stokes equations for laminar flows and for turbulent flows when all of the relevant length scales can be resolved by the grid (a Direct numerical simulation). In general however, the range of length scales appropriate to the problem is larger than even today's massively parallel computers can model. In these cases, turbulent flow simulations require the introduction of a turbulence model. Large eddy simulations (LES) and the Reynolds-averaged Navier–Stokes equations (RANS) formulation, with the k-ε model or the Reynolds stress model, are two techniques for dealing with these scales.

In many instances, other equations are solved simultaneously with the Navier–Stokes equations. These other equations can include those describing species concentration (mass transfer), chemical reactions, heat transfer, etc. More advanced codes allow the simulation of more complex cases involving multi-phase flows (e.g. liquid/gas, solid/gas, liquid/solid), non-Newtonian fluids (such as blood), or chemically reacting flows (such as combustion).

Methodology

In all of these approaches the same basic procedure is followed.

- During preprocessing

- The geometry (physical bounds) of the problem is defined.

- The volume occupied by the fluid is divided into discrete cells (the mesh). The mesh may be uniform or non uniform.

- The physical modeling is defined – for example, the equations of motions + enthalpy + radiation + species conservation

- Boundary conditions are defined. This involves specifying the fluid behaviour and properties at the boundaries of the problem. For transient problems, the initial conditions are also defined.

- The simulation is started and the equations are solved iteratively as a steady-state or transient.

- Finally a postprocessor is used for the analysis and visualization of the resulting solution.

Discretization methods

The stability of the chosen discretization is generally established numerically rather than analytically as with simple linear problems. Special care must also be taken to ensure that the discretization handles discontinuous solutions gracefully. The Euler equations and Navier–Stokes equations both admit shocks, and contact surfaces.

Some of the discretization methods being used are:

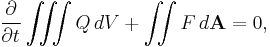

- Finite volume method (FVM). This is the "classical" or standard approach used most often in commercial software and research codes. The governing equations are solved on discrete control volumes. FVM recasts the PDE's (Partial Differential Equations) of the N-S equation in the conservative form and then discretize this equation. This guarantees the conservation of fluxes through a particular control volume. Though the overall solution will be conservative in nature there is no guarantee that it is the actual solution. Moreover this method is sensitive to distorted elements which can prevent convergence if such elements are in critical flow regions. This integration approach yields a method that is inherently conservative (i.e. quantities such as density remain physically meaningful):

-

- where

is the vector of conserved variables,

is the vector of conserved variables,  is the vector of fluxes (see Euler equations or Navier–Stokes equations),

is the vector of fluxes (see Euler equations or Navier–Stokes equations),  is the cell volume, and

is the cell volume, and  is the cell surface area.

is the cell surface area.

- Finite element method (FEM). This method is popular for structural analysis of solids, but is also applicable to fluids. The FEM formulation requires, however, special care to ensure a conservative solution. The FEM formulation has been adapted for use with the Navier–Stokes equations. Although in FEM conservation has to be taken care of, it is much more stable than the FVM. approach[4] Consequently it is the new direction in which CFD is moving. Generally stability/robustness of the solution is better in FEM though for some cases it might take more memory than FVM methods.[5]

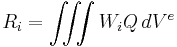

- In this method, a weighted residual equation is formed:

- where

is the equation residual at an element vertex

is the equation residual at an element vertex  ,

,  is the conservation equation expressed on an element basis,

is the conservation equation expressed on an element basis,  is the weight factor and

is the weight factor and  is the volume of the element.

is the volume of the element.

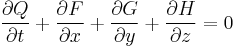

- Finite difference method. This method has historical importance and is simple to program. It is currently only used in few specialized codes. Modern finite difference codes make use of an embedded boundary for handling complex geometries making these codes highly efficient and accurate. Other ways to handle geometries are using overlapping-grids, where the solution is interpolated across each grid.

-

- Where

is the vector of conserved variables, and

is the vector of conserved variables, and  ,

,  , and

, and  are the fluxes in the

are the fluxes in the  ,

,  , and

, and  directions respectively.

directions respectively.

- Boundary element method. The boundary occupied by the fluid is divided into surface mesh.

- High-resolution schemes are used where shocks or discontinuities are present. To capture sharp changes in the solution requires the use of second or higher order numerical schemes that do not introduce spurious oscillations. This usually necessitates the application of flux limiters to ensure that the solution is total variation diminishing.

Turbulence models

Turbulent flow produces fluid interaction at a large range of length scales. This problem means that it is required that for a turbulent flow regime calculations must attempt to take this into account by modifying the Navier–Stokes equations. Failure to do so may result in an unsteady simulation. When solving the turbulence model there exists a trade-off between accuracy and speed of computation.

Direct numerical simulation

Direct numerical simulation (DNS) captures all of the relevant scales of turbulent motion, so no model is needed for the smallest scales. This approach is extremely expensive, if not intractable, for complex problems on modern computing machines, hence the need for models to represent the smallest scales of fluid motion.

Reynolds-averaged Navier–Stokes (RANS) equations are the oldest approach to turbulence modeling. An ensemble version of the governing equations is solved, which introduces new apparent stresses known as Reynolds stresses. This adds a second order tensor of unknowns for which various models can provide different levels of closure. It is a common misconception that the RANS equations do not apply to flows with a time-varying mean flow because these equations are 'time-averaged'. In fact, statistically unsteady (or non-stationary) flows can equally be treated. This is sometimes referred to as URANS. There is nothing inherent in Reynolds averaging to preclude this, but the turbulence models used to close the equations are valid only as long as the time over which these changes in the mean occur is large compared to the time scales of the turbulent motion containing most of the energy.

RANS models can be divided into two broad approaches:

- Boussinesq hypothesis

- This method involves using an algebraic equation for the Reynolds stresses which include determining the turbulent viscosity, and depending on the level of sophistication of the model, solving transport equations for determining the turbulent kinetic energy and dissipation. Models include k-ε (Spalding), Mixing Length Model (Prandtl) and Zero Equation (Chen). The models available in this approach are often referred to by the number of transport equations they include, for example the Mixing Length model is a "Zero Equation" model because no transport equations are solved, and the k-ε on the other hand is a "Two Equation" model because two transport equations are solved.

- Reynolds stress model (RSM)

- This approach attempts to actually solve transport equations for the Reynolds stresses. This means introduction of several transport equations for all the Reynolds stresses and hence this approach is much more costly in CPU effort.

Large eddy simulation

Large eddy simulations (LES) is a technique in which the smaller eddies are filtered and are modeled using a sub-grid scale model, while the larger energy carrying eddies are simulated. This method generally requires a more refined mesh than a RANS model, but a far coarser mesh than a DNS solution.

Detached eddy simulation

Detached eddy simulations (DES) is a modification of a RANS model in which the model switches to a subgrid scale formulation in regions fine enough for LES calculations. Regions near solid boundaries and where the turbulent length scale is less than the maximum grid dimension are assigned the RANS mode of solution. As the turbulent length scale exceeds the grid dimension, the regions are solved using the LES mode. Therefore the grid resolution for DES is not as demanding as pure LES, thereby considerably cutting down the cost of the computation. Though DES was initially formulated for the Spalart-Allmaras model (Spalart et al., 1997), it can be implemented with other RANS models (Strelets, 2001), by appropriately modifying the length scale which is explicitly or implicitly involved in the RANS model. So while Spalart-Allmaras model based DES acts as LES with a wall model, DES based on other models (like two equation models) behave as a hybrid RANS-LES model. Grid generation is more complicated than for a simple RANS or LES case due to the RANS-LES switch. DES is a non-zonal approach and provides a single smooth velocity field across the RANS and the LES regions of the solutions.

Vortex method

The Vortex method is a grid-free technique for the simulation of turbulent flows. It uses vortices as the computational elements, mimicking the physical structures in turbulence. Vortex methods were developed as a grid-free methodology that would not be limited by the fundamental smoothing effects associated with grid-based methods. To be practical, however, vortex methods require means for rapidly computing velocities from the vortex elements – in other words they require the solution to a particular form of the N-body problem (in which the motion of N objects is tied to their mutual influences). A long-sought breakthrough came in the late 1980’s with the development of the Fast Multipole Method (FMM), an algorithm by V. Rokhlin (Yale) and L. Greengard (Courant Institute) that has been heralded as one of the top ten advances in numerical science of the 20th century. This breakthrough paved the way to practical computation of the velocities from the vortex elements and is the basis of successful algorithms.

Software based on the Vortex method offer the engineer a new means for solving tough fluid dynamics problems with minimal user intervention. All that is required is specification of problem geometry and setting of boundary and initial conditions. Among the significant advantages of this modern technology;

- It is practically grid-free, thus eliminating numerous iterations associated with RANS and LES.

- All problems are treated identically. No modeling or calibration inputs are required.

- Time-series simulations, which are crucial for correct analysis of acoustics, are possible.

- The small scale and large scale are accurately simulated at the same time.

Vorticity Confinement method

The Vorticity Confinement method (VC) is an Eulerian technique, well known for the simulation of turbulent wakes. It uses a solitary-wave like approach to produce stable solution with no numerical spreading. VC can capture the small scale features to over as few as 2 grid cells. Within these features, a nonlinear difference equation is solved as opposed to finite difference equation. VC is similar to shock capturing methods, where conservation laws are satisfied, so that the essential integral quantities are accurately computed.

Two phase flow

The modeling of two-phase flow is still under development. Different methods have been proposed. The Volume of fluid method gets a lot of attention lately, but the Level set method and front tracking are also valuable approaches. Most of these methods are either good in maintaining a sharp interface or at conserving mass. This is crucial since the evaluation of the density, viscosity and surface tension in based on the values averaged over the interface.

Solution algorithms

Discretization in space produces a system of ordinary differential equations for unsteady problems and algebraic equations for steady problems. Implicit or semi-implicit methods are generally used to integrate the ordinary differential equations, producing a system of (usually) nonlinear algebraic equations. Applying a Newton or Picard iteration produces a system of linear equations which is nonsymmetric in the presence of advection and indefinite in the presence of incompressibility. Such systems, particularly in 3D, are frequently too large for direct solvers, so iterative methods are used, either stationary methods such as successive overrelaxation or Krylov subspace methods. Krylov methods such as GMRES, typically used with preconditioning, operate by minimizing the residual over successive subspaces generated by the preconditioned operator.

Multigrid is especially popular, both as a solver and as a preconditioner, due to its asymptotically optimal performance on many problems. Traditional solvers and preconditioners are effective at reducing high-frequency components of the residual, but low-frequency components typically require many iterations to reduce. By operating on multiple scales, multigrid reduces all components of the residual by similar factors, leading to a mesh-independent number of iterations.

For indefinite systems, preconditioners such as incomplete LU factorization, additive Schwarz, and multigrid perform poorly or fail entirely, so the problem structure must be used for effective preconditioning.[6] The traditional methods commonly used in CFD are the SIMPLE and Uzawa algorithms which exhibit mesh-dependent convergence rates, but recent advances based on block LU factorization combined with multigrid for the resulting definite systems, have led to preconditioners which deliver mesh-independent convergence rates.[7]

See also

- Blade element theory

- Finite element analysis

- Immersed boundary method

- List of finite element software packages

- Multidisciplinary design optimization

- Turbulence modeling

- Visualization

- Wind tunnel

Notes

- ↑ Milne-Thomson, L.M. (1973). Theoretical Aerodynamics. Dover Publications. ISBN 048661980X.

- ↑ Hess, J.L.; A.M.O. Smith (1967). "Calculation of Potential Flow About Arbitrary Bodies". Progress in Aeronautics Sciences 8: 1–138. doi:10.1016/0376-0421(67)90003-6. http://www.sciencedirect.com/science?_ob=ArticleURL&_udi=B6V3V-4811PYT-33&_user=10&_coverDate=12%2F31%2F1967&_alid=1339703692&_rdoc=1&_fmt=high&_orig=search&_cdi=5740&_docanchor=&view=c&_ct=176&_acct=C000050221&_version=1&_urlVersion=0&_userid=10&md5=2939d59a5c94a28fcc51ab6d6cec0305.

- ↑ "NASCART". Daniel Guggenheim School of Aerospace Engineering. http://www.ae.gatech.edu/people/sruffin/nascart/. Retrieved 2007-07-28.

- ↑ http://www3.interscience.wiley.com/journal/112692130/abstract?CRETRY=1&SRETRY=0

- ↑ Huebner, K. H., Thornton, E. A., and Byron, T. D., The Finite Element Method for Engineers, 3rd ed., Wiley Interscience(1995).

- ↑ Benzi, Golub, Liesen: "Numerical solution of saddle-point problems", Acta Numerica, 2005.

- ↑ Elman et al.: "A taxonomy and comparison of parallel block multi-level preconditioners for the incompressible Navier–Stokes equations", Journal of Computational Physics, vol. 227, 2008.

References

- Anderson, John D. (1995), Computational Fluid Dynamics: The Basics With Applications, Science/Engineering/Math, McGraw-Hill Science, ISBN 0070016852

- Patankar, Suhas (1980), Numerical Heat Transfer and Fluid Flow, Hemisphere Series on Computational Methods in Mechanics and Thermal Science, Taylor & Francis, ISBN 0891165223

- Shah, Tasneem M.; Sadaf Siddiq, Zafar U. Koreshi. "An analysis and comparison of tube natural frequency modes with fluctuating force frequency from the thermal cross-flow fluid in 300 MWe PWR.". International Journal of Engineering and Technology 9 (9): 201-205. http://docs.google.com/viewer?a=v&q=cache:4gQM2Vj9itIJ:www.ijens.org/1936091%2520IJET.pdf+tasneem+M.+Shah&hl=en&gl=us&pid=bl&srcid=ADGEESg-lxYkvf5xfu4Nv6UfF-xgO2yzh2O6wa7EzNVurfVgtXdi_bkvvSAijrk7DvwDqWJE9Ke-trzYKGEM5GCvi5w-qmbo5nvDaGt4kv-mBr-XL6in2xo0WRyHVX3o4roKLkcZgTAk&sig=AHIEtbQ4XElNn3nmOsUXoM0JdkNeY9cMxg.

External links

- CFD Tutorial Many examples and images, with references to robotic fish.

- CFD-Wiki

- Course: Introduction to CFD – Dmitri Kuzmin (Dortmund University of Technology)